If you’re in the digital marketing world, you know how crucial it is to get your website noticed by search engines like Google. But did you ever stop to wonder how those search engines even find your site in the vast expanse of the internet? That’s where crawlability steps in. Understanding crawlability can significantly impact your website’s success.

Simply put, crawlability refers to how easily search engine bots can access and explore your website’s content. These bots, sometimes called “spiders,” scour the web, following links from page to page, much like you would. They gather information about your site’s content to determine what it’s about and where it should rank in search results.

The Connection Between Crawlability and Indexability

Think of crawlability as laying the groundwork for indexability. If crawlers can’t access and understand your website, they can’t add it to their index, the massive library where Google stores information about every page it knows about. Indexability is the next test: whether a page that has been crawled can be officially “cataloged” by search engines.

If a page isn’t crawlable, it can’t be indexed. And when you have indexability issues, you can forget about showing up in Google search results. This is why ensuring your website is crawlable is an essential part of any SEO strategy.

Why Should You Care about Your Website’s Crawlability?

You might be wondering why you should even care about crawlability if indexability is the ultimate goal. Here’s the thing: if Google (or Bing or Yahoo) can’t easily crawl your site, it has nothing to work with when it tries to understand your content or decide where to rank it. You’ll be stuck in internet obscurity, unseen by the vast majority of your target audience.

In other words, good crawlability means a better chance of ranking well and getting those precious clicks to your site. Any website audit should include a crawlability check.

Factors Affecting Crawlability: Where to Start

Now that you know the answer to “what is crawlability,” let’s explore the factors that affect it. Fixing crawlability issues helps search engines find and index your website, and a site audit tool can help you identify them.

Website Structure:

Imagine walking into a library with books strewn all over the floor and no clear sections or labels. That’s how search engines feel when faced with a poorly structured website. An organized structure that starts at your homepage and branches out into clear categories and subpages makes it easier for those bots to navigate.

A well-organized website with a clear XML sitemap allows them to discover and index more pages. Plus, a logical website structure not only benefits web crawlers but also makes for happy visitors.

Internal Links:

Think of your internal links as signposts. Placed thoughtfully throughout your content, they guide visitors and search engine bots to related pages on your website. They also provide valuable context for search engines, helping them understand the relationships between different pages.

A good internal linking structure tells search engines which pages are most important and what your website is about. Every page on your website should be reachable from at least one other page; a page with no internal links pointing to it is easy for crawlers to miss.
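
To make the “reachable from at least one other page” idea concrete, here is a minimal sketch in Python. It assumes you already have a map of which pages link to which (the `site_links` dictionary and its URLs are made up for illustration), then walks the links from the homepage to report how many clicks deep each page sits and which pages can’t be reached at all.

```python
from collections import deque

def crawl_depths(links, start):
    """Breadth-first walk over internal links; returns {page: clicks from start}."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def orphan_pages(links, start):
    """Pages that appear in the link map but cannot be reached from start."""
    known = set(links) | {t for targets in links.values() for t in targets}
    return sorted(known - set(crawl_depths(links, start)))

# Hypothetical site: each key links to the pages in its list.
site_links = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/what-is-crawlability"],
    "/services": ["/"],
    "/blog/what-is-crawlability": ["/services"],
    "/old-landing-page": ["/"],  # links out, but nothing links TO it
}

print(crawl_depths(site_links, "/"))
print(orphan_pages(site_links, "/"))
```

In this made-up example, `/old-landing-page` shows up as an orphan: a bot that starts at the homepage and only follows links would never find it.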

Broken Links and Errors:

Imagine encountering a dead-end while driving. Frustrating, right? Broken links have a similar effect on search engine bots.

Broken links interrupt a crawl and keep bots from reaching potentially valuable pages. Regularly checking for broken links and 4xx errors with an auditing tool like Screaming Frog helps keep your website healthy and easy for bots to explore. Screaming Frog can crawl your site and give you a list of broken links so you can fix them.
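
If you’re curious what a broken-link check looks like under the hood, here is a rough sketch in Python. It is not how Screaming Frog or any particular tool works; it simply pulls the links out of a page’s HTML and flags any whose status code is 400 or higher. The page, the URLs, and the `fake_statuses` lookup are all hypothetical so the example runs without touching the network.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

def find_broken_links(page_url, html, get_status):
    """Return (url, status) pairs for links that answer with a 4xx or 5xx code.

    get_status is any callable that maps a URL to an HTTP status code.
    """
    collector = LinkCollector()
    collector.feed(html)
    broken = []
    for href in collector.hrefs:
        url = urljoin(page_url, href)  # resolve relative links
        status = get_status(url)
        if status >= 400:
            broken.append((url, status))
    return broken

# Hypothetical page and canned statuses, so the example runs offline.
sample_html = '<a href="/pricing">Pricing</a> <a href="/old-post">Old post</a>'
fake_statuses = {
    "https://example.com/pricing": 200,
    "https://example.com/old-post": 404,
}

print(find_broken_links("https://example.com/", sample_html, fake_statuses.get))
```

On a real site, `get_status` would make an HTTP request for each URL instead of reading from a dictionary.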

Robots.txt:

Think of your robots.txt file as your website’s bouncer. It’s a set of rules that tell those busy bots which parts of your site they are allowed or not allowed to crawl.

Use it wisely; accidentally blocking important pages can spell disaster for your site’s visibility. Keep in mind that robots.txt manages which pages search engine bots crawl, not which pages end up in the index: a blocked page can’t be read by a well-behaved bot, but the file on its own is not a reliable way to keep a URL out of search results.
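
One way to catch an accidental block before it hurts you is to test your rules against the URLs you care about. The sketch below uses Python’s built-in `urllib.robotparser`; the rules and URLs are invented for illustration, and Python’s parser may not match Google’s interpretation in every edge case, so treat it as a quick sanity check rather than the final word.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for illustration.
robots_txt = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

def is_crawlable(url, user_agent="Googlebot"):
    """True if the rules above let this user agent fetch the URL."""
    return parser.can_fetch(user_agent, url)

print(is_crawlable("https://example.com/blog/what-is-crawlability"))
print(is_crawlable("https://example.com/admin/settings"))
```

With these rules, the blog post is open to crawlers while anything under `/admin/` is off limits.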

XML Sitemap:

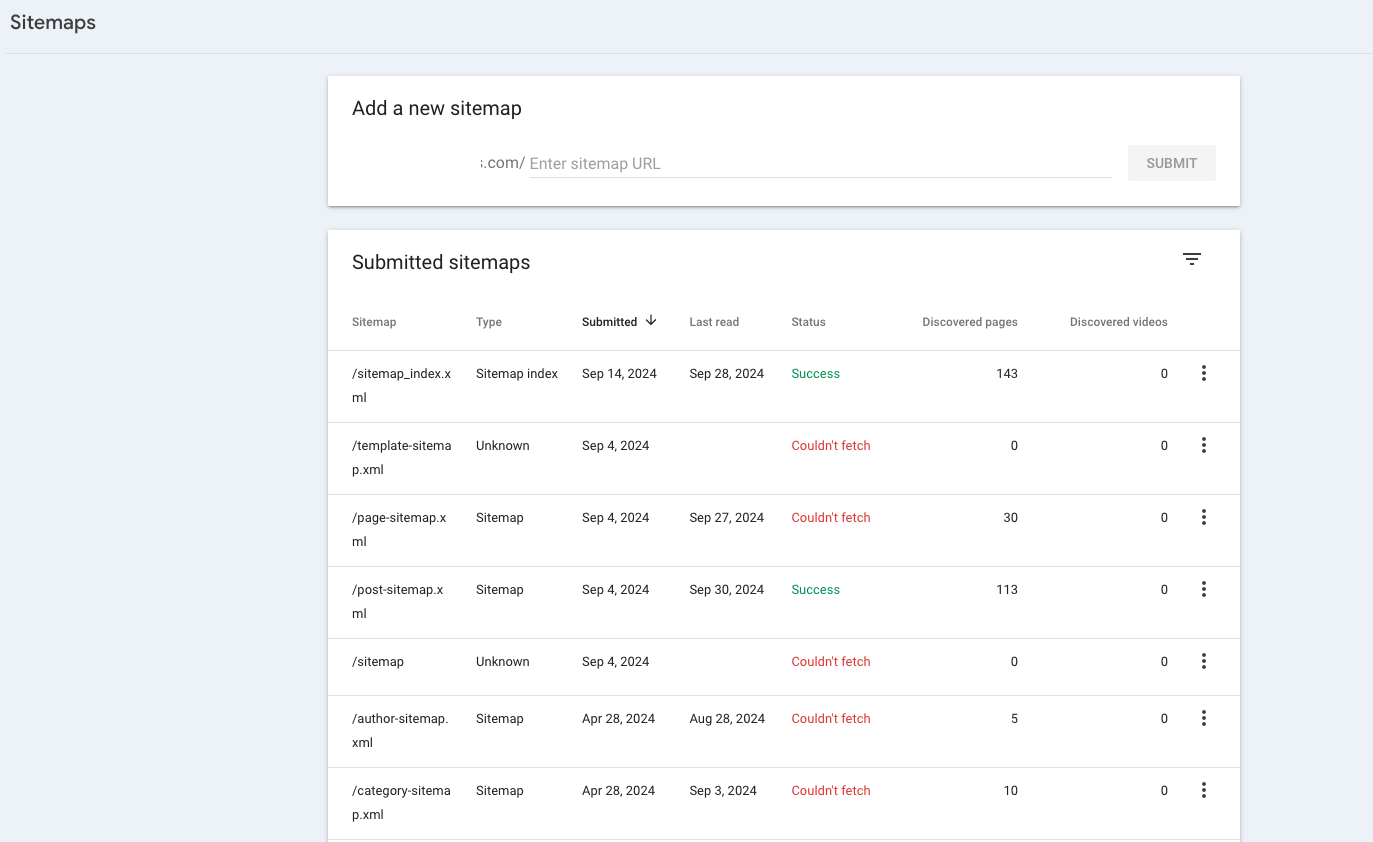

Your sitemap is like providing a blueprint for those web crawlers. This handy XML sitemap lists all essential pages, even those tucked away and potentially overlooked.

By submitting your XML sitemap to Google Search Console, you leave less to chance and make it more likely that key pages are noticed and indexed promptly. Update the sitemap whenever you add content so new pages can be picked up during a Google crawl.
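
For a sense of what actually goes into the file, here is a small sketch that builds a sitemap with Python’s standard library. The page URLs and dates are placeholders, and most CMS platforms and SEO plugins generate this file for you, so this is only meant to show the structure.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs; dates as YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")

# Placeholder pages for illustration.
pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/what-is-crawlability", "2024-02-01"),
]

print(build_sitemap(pages))
```

Each `<url>` entry carries the page address in `<loc>` and, optionally, the date it last changed in `<lastmod>`.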

Other Essential Factors Impacting Crawlability:

| Factor | Why It Matters |
|---|---|
| Page Speed: | Sluggish pages frustrate everyone, including crawlers. Slow responses can limit how many pages a bot gets through within its crawl budget. |
| Mobile Friendliness: | With Google’s mobile-first indexing, Google primarily crawls and indexes the mobile version of your pages, so a mobile-friendly or responsive site isn’t optional. Ensure seamless transitions across different screen sizes for those on-the-go users. |
| Redirects: | Redirects guide visitors to the right place when a page is moved or deleted, but long redirect chains (where one redirect points to another) or redirect loops (where redirects circle back to a URL already visited) can frustrate crawlers and impact your website’s SEO. |
| Server Errors: | Server (5xx) errors can prevent crawlers from accessing your website altogether. Monitor them routinely, and check them first after a sudden drop in traffic, which could indicate a deeper issue. |
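
The redirect row is easier to picture with an example. This sketch assumes you have exported your redirects as a simple “from, to” map (the paths below are invented) and follows each one to see whether it resolves cleanly, circles back on itself, or takes more hops than you’re willing to tolerate. The `max_hops` limit of 5 is an arbitrary choice for illustration, not a number taken from any search engine.

```python
def trace_redirects(redirects, start, max_hops=5):
    """Follow redirects from start; return (path, outcome).

    outcome is "ok", "loop", or "too_long".
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"  # we have been here before
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too_long"  # more hops than allowed
    return path, "ok"

# Hypothetical redirect map: old path -> new path.
redirects = {
    "/old-pricing": "/pricing-2023",
    "/pricing-2023": "/pricing",
    "/a": "/b",
    "/b": "/a",
}

print(trace_redirects(redirects, "/old-pricing"))
print(trace_redirects(redirects, "/a"))
```

Here `/old-pricing` resolves after two hops, which is a chain worth flattening into a single redirect, while `/a` and `/b` point at each other and never resolve.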

FAQs about What is Crawlability?

What does crawlability mean?

Crawlability refers to a search engine’s ability to access and explore your website to analyze the content. This process is the foundation for getting your pages indexed by search engines. Think of it like this – if a search engine can’t crawl your site, it can’t index it.

Why is crawlability important for SEO?

Crawlability is essential because it directly impacts how well search engines can understand and index your website. The better your crawlability, the better your chances of ranking higher in search engine results pages (SERPs). Good crawlability can lead to more visibility, organic traffic, and ultimately, conversions for your business.

Conclusion

So, what is crawlability in the simplest terms? It’s the bedrock for visibility, ensuring your site is accessible and easily explored by search engine bots (also called crawlers or spiders).

Good crawlability isn’t just a technical SEO tick-box. It translates to a better chance of being indexed, which in turn supports improved rankings and increased traffic. It’s a fundamental aspect of SEO that shapes your online visibility and your website’s success. Prioritizing and improving the crawlability of your website is an investment in its long-term growth and reach.